Difference between concurrency and parallelism

Introduction

Concurrency and parallelism are often used interchangeably in computing, but they do not mean the same thing. If you search for "concurrency vs parallelism", you will find that different articles explain it differently. I think part of the confusion comes from the way people define these terms. In this post, I am not going to add to what other people have said; instead, I will try to rephrase it in simpler words so that it is easy for beginners and students.

So what is the difference in plain English?

Concurrency vs Parallelism

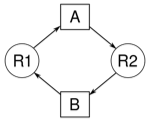

I noticed that some people refer to concurrency when talking about multiple threads of execution and to parallelism when talking about systems with multicore processors. This is a nice way to distinguish the two, but it can be misleading. For example, a multithreaded application can run on multiple processors, so the same program would fall under both labels.

Another way to make the distinction between the two is to think of it in these terms:

- Concurrency: when referring to independent but related tasks running at the same time (threads are a good example)

- Parallelism: when referring to complex tasks divided into smaller logical subtasks

You can have an application with multiple threads of execution (e.g. one thread downloading a file and another thread updating the GUI). These threads can appear to run at the same time on a single processor through time sharing, or truly run at the same time on multiple processors. In both cases, this is an example of concurrency. Concurrency also comes up when managing thread access to a shared resource.
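The download-plus-GUI scenario can be sketched in Python as below. This is only an illustration under stated assumptions: the `Download` class, `download_file`, `update_gui`, and `run_demo` are hypothetical names, the "download" is simulated with `time.sleep`, and the "GUI" just records the progress values it sees. The lock shows one common way to manage access to the shared counter.

```python
import threading
import time


class Download:
    """Hypothetical shared resource: progress of a simulated download."""

    def __init__(self, total_chunks):
        self.total_chunks = total_chunks
        self.done_chunks = 0
        self.lock = threading.Lock()  # guards every access to done_chunks


def download_file(download):
    """Stand-in for a real download: advances the shared counter in steps."""
    for _ in range(download.total_chunks):
        time.sleep(0.01)  # pretend to wait on the network
        with download.lock:
            download.done_chunks += 1


def update_gui(download, snapshots):
    """Stand-in for a GUI loop: polls progress until the download finishes."""
    while True:
        with download.lock:
            current = download.done_chunks
        snapshots.append(current)  # a real GUI would redraw a progress bar
        if current >= download.total_chunks:
            break
        time.sleep(0.005)


def run_demo(total_chunks=5):
    """Run both tasks concurrently and return the progress values observed."""
    download = Download(total_chunks)
    snapshots = []
    threads = [
        threading.Thread(target=download_file, args=(download,)),
        threading.Thread(target=update_gui, args=(download, snapshots)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return snapshots
```

Calling `run_demo()` returns the sequence of progress values the polling thread saw. The exact sequence depends on how the threads are scheduled, but it never decreases and it ends at the total number of chunks.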

Now imagine you have a task that counts the number of lines in a file. You can split the file into smaller files, count the number of lines in each small file, and then add all the sub counts to get the grand total. You can do this operation in parallel if each counting subtask is handled by a separate core on a single machine, or distributed across multiple machines over a network (e.g. MapReduce). In either case, this is parallelism.

Sometimes we may encounter the term vector computing, which is directly related to parallelism. Instead of operating on a single input value, we operate on vectorized data. Some systems are optimized to process vector data, such as a GPU handling graphics or image pixel data. Notice that this is similar to the earlier example of a large task divided into smaller logical subtasks.
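The split, count, and add steps can be sketched in Python as follows. This is a minimal sketch, not a definitive implementation: it works on an in-memory string rather than real files, and the names `count_lines`, `split_into_chunks`, `count_lines_parallel`, and the `executor_factory` parameter are assumptions made for the example. It uses the standard library's `ProcessPoolExecutor` so that each chunk can be counted by a separate worker process.

```python
from concurrent.futures import ProcessPoolExecutor


def count_lines(chunk):
    """Subtask: count the lines in one chunk of text."""
    return len(chunk.splitlines())


def split_into_chunks(lines, parts):
    """Group a list of lines into at most `parts` chunks of text."""
    size = max(1, -(-len(lines) // parts))  # ceiling division
    return ["".join(lines[i:i + size]) for i in range(0, len(lines), size)]


def count_lines_parallel(text, parts=4, executor_factory=ProcessPoolExecutor):
    """Split the text, count each chunk in a worker, then add the sub counts."""
    lines = text.splitlines(keepends=True)
    chunks = split_into_chunks(lines, parts)
    with executor_factory(max_workers=parts) as pool:
        return sum(pool.map(count_lines, chunks))
```

When running this as a script, call `count_lines_parallel(text)` from under an `if __name__ == "__main__":` guard, which process pools require on platforms that start workers by re-importing the main module. Splitting on line boundaries matters here: cutting the text at arbitrary byte offsets could break a line in two and count it twice.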

Is there a definition?

Concurrency and parallelism definition

While researching this topic I found the following definition:

- Concurrency refers to dealing with lots of things at the same time

- Parallel computing refers to doing more work by simultaneous activity

Let us compare that with our proposed criteria. In the first bullet, the key phrase is "dealing with lots of things". In the second bullet, the key phrase is "doing more work". I think this definition is aligned with what we said earlier. Dealing with many things corresponds to managing multiple threads of execution, and doing more work corresponds to splitting a task into smaller logical subtasks and then distributing them across multiple cores or machines.

To get a better idea, let us take some examples…

Concurrency examples

- Opening multiple tabs in a web browser

- Downloading a file in the background while updating the GUI

Parallelism examples

- Distributed MapReduce job (e.g. counting lines in a file)

- Graphics computations using a GPU (e.g. converting image pixel data to a different format)

Summary

Here is a tabular summary of key differences between concurrency and parallelism:

| Concurrency vs Parallelism |
|---|
| Concurrency and parallelism are often used interchangeably, but they refer to related yet different concepts |
| Concurrency refers to independent (but related) tasks running at the same time |
| Concurrency is relevant when discussing thread access to shared resources |
| Parallelism refers to splitting a big task into smaller logical subtasks and running these subtasks on multiple cores or processors |
| Vector computing is also mentioned sometimes, but it is one form of parallel computing |

References

- To get more insight about the topic I recommend that you read this article which compares multiprogramming, multitasking, multithreading and multiprocessing

About Author

Mohammed Abualrob

Software Engineer @ Cisco